Virtual fitting room

An AI-driven imaging system allows users to try on virtual outfits in real time

Digital mirror. A user wearing the measurement garment sees themself instead wearing a pre-selected item of digitized clothing from the store’s catalog. © 2021 Igarashi Lab

A team of researchers has created a way for people to visualize themselves wearing items of clothing they don’t have direct physical access to. The virtual try-on system uses a unique capture device and an artificial intelligence (AI)-driven way to digitize items of clothing. Using a corresponding imaging and display system, a user can then view themself on a screen wearing anything from the digital wardrobe. An AI engine synthesizes photorealistic images, allowing movement and details such as folds and ripples to be seen as if the user were really wearing the virtual clothing.

Shopping for clothes can be difficult. Often you get to the store and find they’re out of the style or size of garment you intended to try on. A lot of shopping can be done online now, but with clothes it’s very hard to judge how well something will fit you or whether you’ll like it once you see it in person. To address these issues, there have been some attempts over the years to create a sort of digital mirror that can visualize a user and superimpose an item of clothing on top of them. However, these systems lack functionality and realism.

Robot mannequin. This specially designed robot moves and reshapes itself to adjust to different clothing sizes. © 2021 Igarashi Lab

Professor Takeo Igarashi from the User Interface Research Group at the University of Tokyo and his team explore different ways humans can interact with computers. They felt they could create their own digital mirror that resolves some limitations of previous systems. Their answer is the virtual try-on system, and the team hopes it can change how we shop for clothes in the future.

“The problem of creating an accurate digital mirror is twofold,” said Igarashi. “Firstly, it’s important to model a wide range of clothes in different sizes. And secondly, it’s essential these garments can be realistically superimposed onto a video of the user. Our solution is unique in the way it works by using a bespoke robotic mannequin and a state-of-the-art AI that translates digitized clothing for visualization.”

Training models. The desired garment is moved through a range of motions so it can later be matched to the user’s own movements. © 2021 Igarashi Lab

To digitize garments, the team designed a mannequin that can move, expand, and contract in different ways to reflect different body poses and sizes. A manufacturer would need to dress this robotic mannequin in a garment, then allow it to cycle through a range of poses and sizes while cameras capture it from different angles. The captured images are fed to an AI that learns how to translate them to fit an as-yet-unseen user. At present, the image capture for one item takes around two hours, but once someone has dressed the mannequin, the rest of the process is automated.
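The capture stage described above amounts to visiting every combination of pose, size, and camera angle. A minimal sketch of such a capture plan follows; the pose names, size labels, and camera positions are hypothetical placeholders, since the article does not list the ones the team actually uses.

```python
from itertools import product

def capture_plan(poses, sizes, cameras):
    """Yield one (pose, size, camera) tuple per image to capture."""
    # The mannequin holds each pose/size combination while every camera takes a shot.
    for pose, size in product(poses, sizes):
        for camera in cameras:
            yield pose, size, camera

# All values below are illustrative assumptions, not the team's settings.
poses = ["arms_down", "arms_raised", "torso_twist"]
sizes = ["S", "M", "L"]
cameras = ["front", "left", "right"]

plan = list(capture_plan(poses, sizes, cameras))
print(len(plan))  # 27 images: 3 poses x 3 sizes x 3 cameras
```

Because the plan is fully determined once the garment is on the mannequin, it can run unattended, which matches the article's point that only the dressing step is manual.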

“Though the image capture time is only two hours, the deep-learning system we’ve made to train models for later visualization takes around two days to finish,” said graduate student Toby Chong, one of the paper’s co-authors. “However, this time will naturally decrease as computational performance increases.”

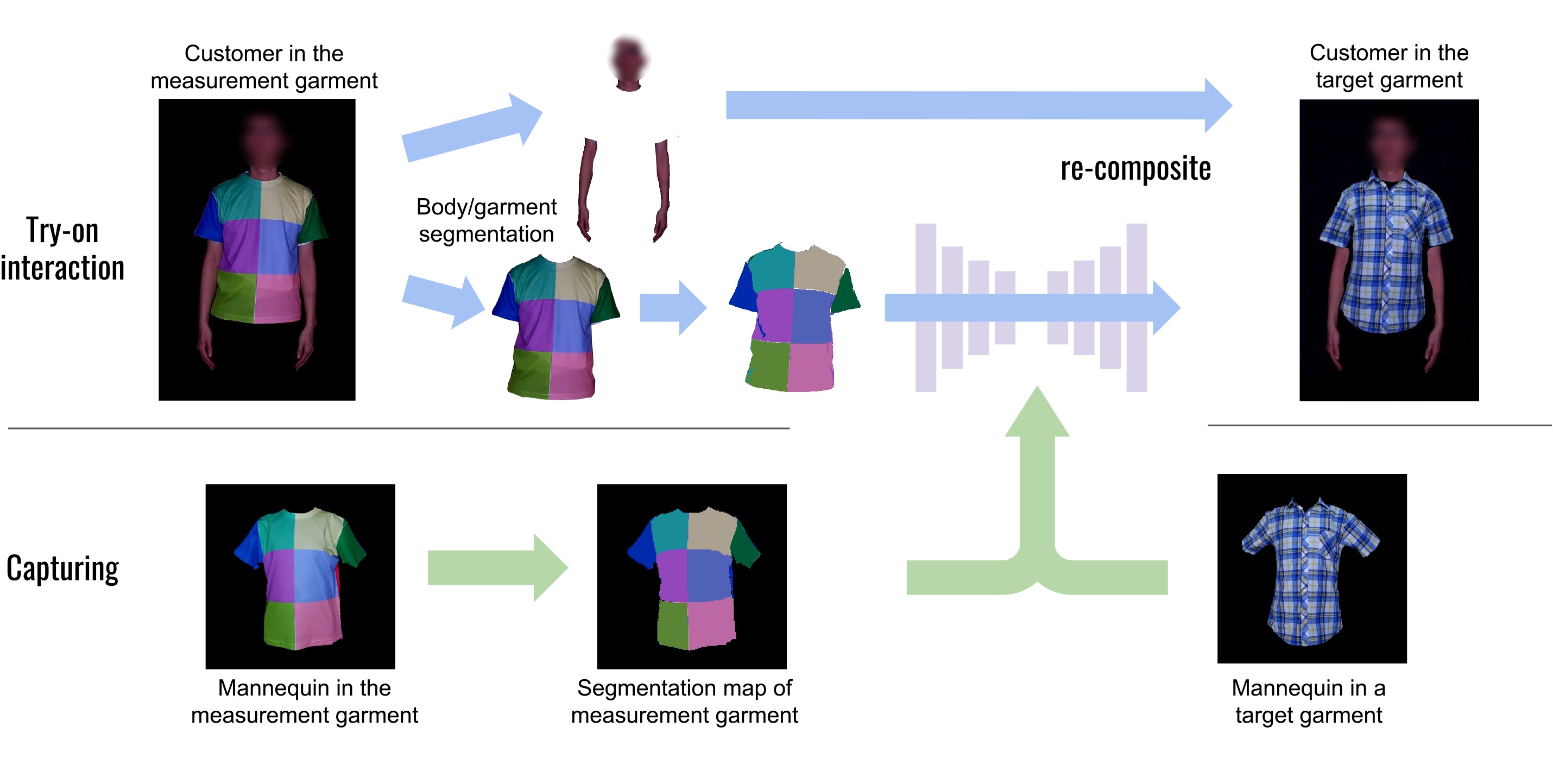

Then comes the user interaction. Someone wishing to try on different clothes would need to come to the store and stand in front of a camera and screen. They would put on a specially made outfit, called the measurement garment, printed with an unusual pattern: a nonrepeating arrangement of differently colored squares. This makes it easy for a computer to estimate how someone’s body is positioned in space according to how the colored squares move relative to one another. As the user moves, the computer synthesizes a plausible image of the garment that follows the user’s motion.
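To illustrate why a nonrepeating pattern helps, here is a small sketch in which every 2 x 2 block of colored squares occurs only once, so seeing any one block is enough to tell where on the pattern it sits. This is an assumption about how such a pattern could be used, not the team's published method; the grid size, number of colors, and the random-search construction are all hypothetical.

```python
import random

def windows(grid, k=2):
    """Yield ((row, col), patch) for every k x k window in the grid."""
    rows, cols = len(grid), len(grid[0])
    for r in range(rows - k + 1):
        for c in range(cols - k + 1):
            patch = tuple(tuple(grid[r + i][c:c + k]) for i in range(k))
            yield (r, c), patch

def make_pattern(rows, cols, n_colors, k=2, seed=0, max_tries=10000):
    """Randomly search for a grid whose k x k windows are all distinct."""
    rng = random.Random(seed)
    for _ in range(max_tries):
        grid = [[rng.randrange(n_colors) for _ in range(cols)] for _ in range(rows)]
        patches = [p for _, p in windows(grid, k)]
        if len(set(patches)) == len(patches):
            return grid
    raise RuntimeError("no nonrepeating pattern found; try more colors")

def build_index(grid, k=2):
    """Map each unique k x k patch to its top-left position on the pattern."""
    return {patch: pos for pos, patch in windows(grid, k)}

# Hypothetical 8 x 8 pattern with 6 colors (colors are integers 0..5).
grid = make_pattern(rows=8, cols=8, n_colors=6)
index = build_index(grid)

# Pretend the camera saw the 2 x 2 block whose top-left square is at row 3, column 4.
observed = tuple(tuple(grid[3 + i][4:6]) for i in range(2))
print(index[observed])  # (3, 4)
```

A real system would also have to cope with occlusion, stretching, and color noise; the sketch only shows the lookup that a nonrepeating layout makes possible.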

System overview. This schematic shows the different stages of the process including capture and interaction. © 2021 Igarashi Lab

“An advantage of our system is that the virtual garment matches the motion of the measurement garment including things like wrinkles or stretching. It can look very realistic indeed,” said Igarashi. “However, there are still some issues we wish to improve. For example, at present the lighting conditions in the user’s environment need to be tightly controlled. It would be better if it could work well in less-controlled lighting situations. Also, there are some visual artifacts we intend to correct, such as small gaps at the edges of the garments.”

The team has several possible applications in mind, online shopping being the most obvious. A side benefit is that it could prevent a large amount of fashion waste, as buyers could have greater assurance about their purchases and clothing could even be made on demand rather than at scale. But virtual try-on could also find use in the world of online video, including conferences and production environments, where appearance is important and budgets are tight.

Papers

Toby Chong, I-Chao Shen, Nobuyuki Umetani, and Takeo Igarashi, "Per Garment Capture and Synthesis for Real-time Virtual Try-on," ACM Symposium on User Interface Software and Technology (UIST ’21): October 8, 2021, doi:10.1145/3472749.3474762.


Related links

- User Interface Research Group

- Department of Creative Informatics

- Graduate School of Information Science and Technology